Boost Digital Product Success by Mastering User Testing

As a digital product owner or product manager, you may have direct or indirect experience with user testing in some form. User testing typically follows the user research process: you apply what you learned to test a hypothesis, idea, or concept that you or your team may have about a digital product. Its value lies not only in validating these discoveries but, more critically, in showing what can improve the user experience. Without substantiated validation, you’re likely to miss an opportunity to create and refine a digital product that accommodates user needs and expectations. Users may then abandon your product in search of one that does.

The process for user testing distills down to two essential ingredients. The first is ensuring that what you test offers enough interaction to be contextually relevant and applicable. The second is asking the questions that yield the best and most actionable insights. When users can easily understand, perform, and achieve the requested tasks, they are able to provide thoughtful and insightful responses.

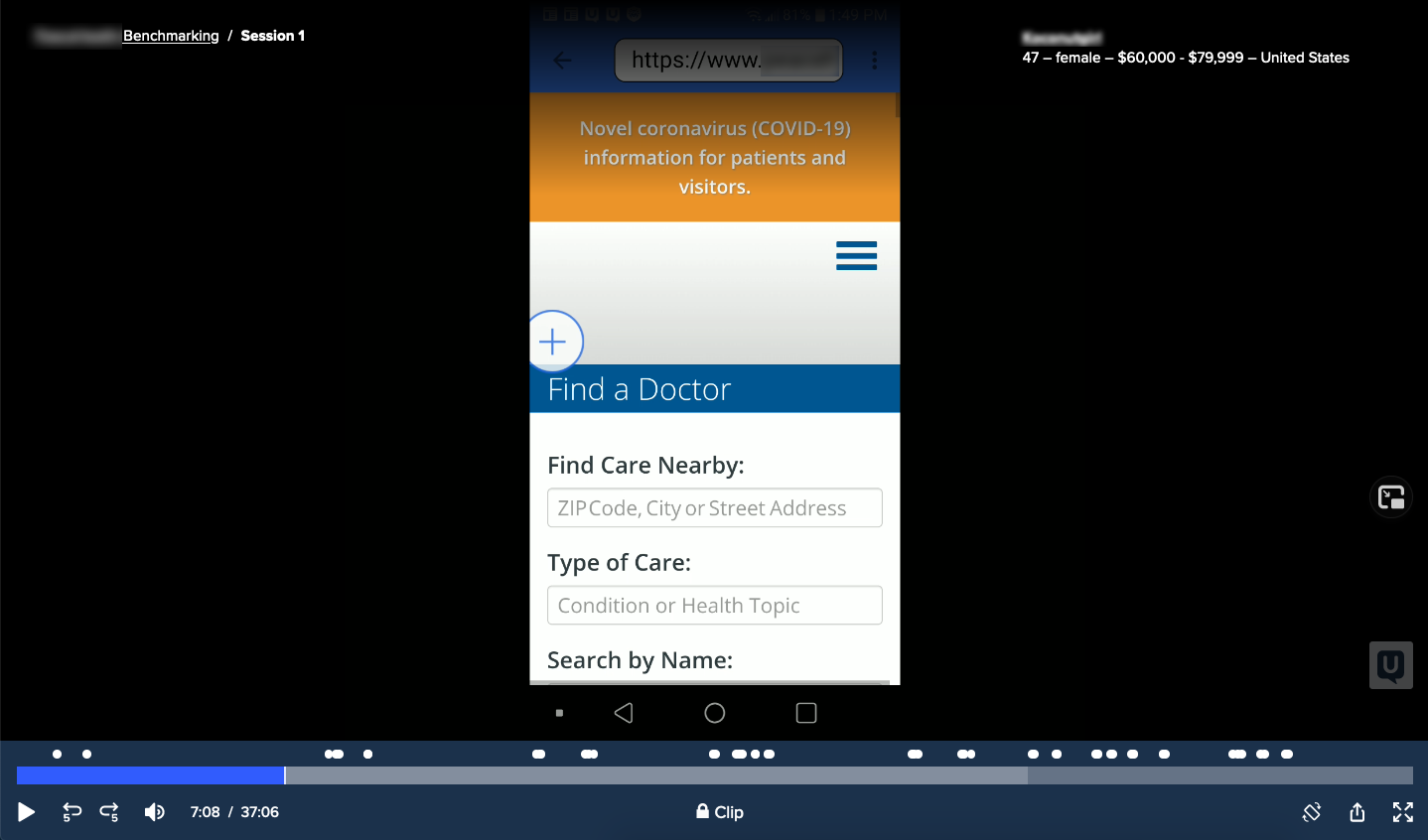

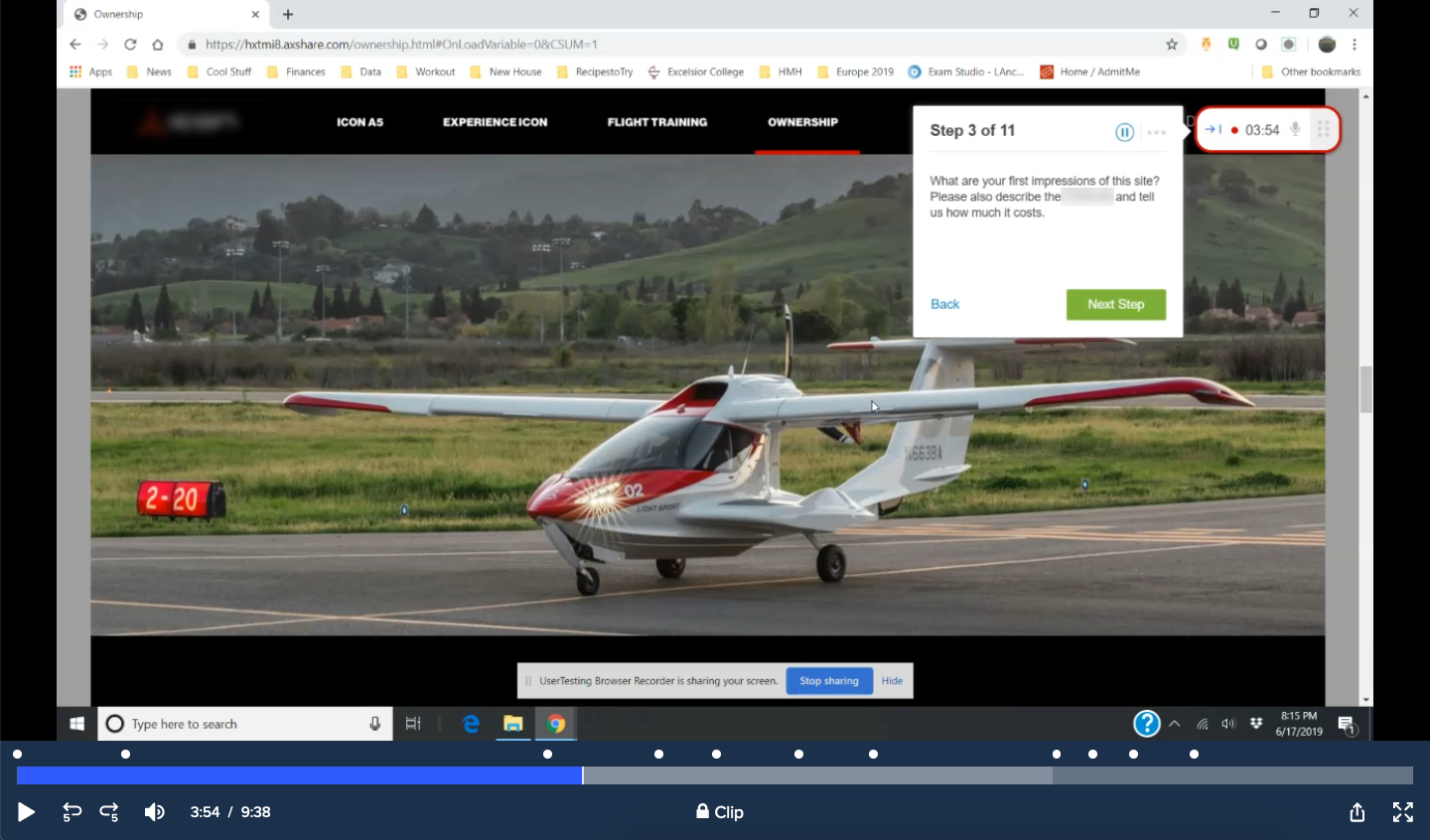

We have a companion article that can help you decide whether moderated or unmoderated user testing would be best suited for your project and needs. Moderated user testing involves asking users questions directly, whereas in unmoderated user testing users take tests on their own time, without you or your team present. The latter is typically done through a paid testing platform like Usertesting.com.

In this article, we’ll outline how much interactivity your test material needs and highlight how best to format user testing questions. Regardless of which user testing protocols you choose to implement, you will be able to attain actionable insights and propel your digital product to the next level.

Setting Your Sights: Determining Goals and Expected Outcomes in User Testing

Before we begin, it’s essential to have goals and/or intended outcomes clearly specified as you go into the user testing process. Start by clarifying the purpose of the testing. What specific insights do you want to gather? What hypotheses do you want to test? Clearly define your user testing goals to ensure that everything that follows aligns with your objectives. Typically, goals and outcomes are shaped by a mixture of qualitative and quantitative user-provided data.

Qualitative data is often descriptive and rich in detail, focusing on the quality and characteristics of what is tested. Users will tell you how they feel performing an action, whether it is good, bad, or neutral. This kind of information can give you a deeper understanding of what your customers are thinking and feeling as they go about their tasks.

Quantitative data, on the other hand, measures the success or failure of a given user task. For example, three out of ten participants may not continue past a certain part of the user test because a required interaction was too difficult or didn’t make sense to them.
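To make the quantitative side concrete, here is a minimal sketch in Python of how a pass/fail result could be turned into a task completion rate. The function name and the sample data are hypothetical, chosen only to mirror the three-out-of-ten example above; your testing platform may report this figure for you.

```python
def completion_rate(results):
    """Return the share of participants who completed the task (0.0 to 1.0).

    `results` is a list of booleans, one per participant:
    True if they completed the task, False if they did not.
    """
    if not results:
        raise ValueError("results must contain at least one participant")
    return sum(1 for passed in results if passed) / len(results)


# Hypothetical session: ten participants, three of whom did not get past the task.
results = [True] * 7 + [False] * 3
rate = completion_rate(results)
print(f"{rate:.0%} of participants completed the task")
```

A single number like this says that a problem exists, not why. That is where the qualitative feedback comes in.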

Capturing the specific goals and outcomes of user testing will help keep you, your team, and your stakeholders in alignment. You’ll be able to ensure that testing data is well received and acted upon within your organization.

Unpacking User Testing: How to Decide What You’re Evaluating

Now that you have your goal and/or outcome outlined, it’s time to specifically define what you are going to test. Typically, you’ll either be testing an existing platform or building a prototype that contains a certain level of interactivity to capture the most essential information and data you need from users.

Existing Platform User Testing

Existing platform user testing assesses digital properties or prototypes in their current, unaltered state to gather insight from users about the experience itself and to collect early-stage quantitative and qualitative feedback. This will reveal the pain points that your customers are experiencing directly. For instance, the navigation may work as coded but be hard to use or understand. Having critical pieces of information like this prior to roll-out can help create better solutions for your customers.

Prototype User Testing

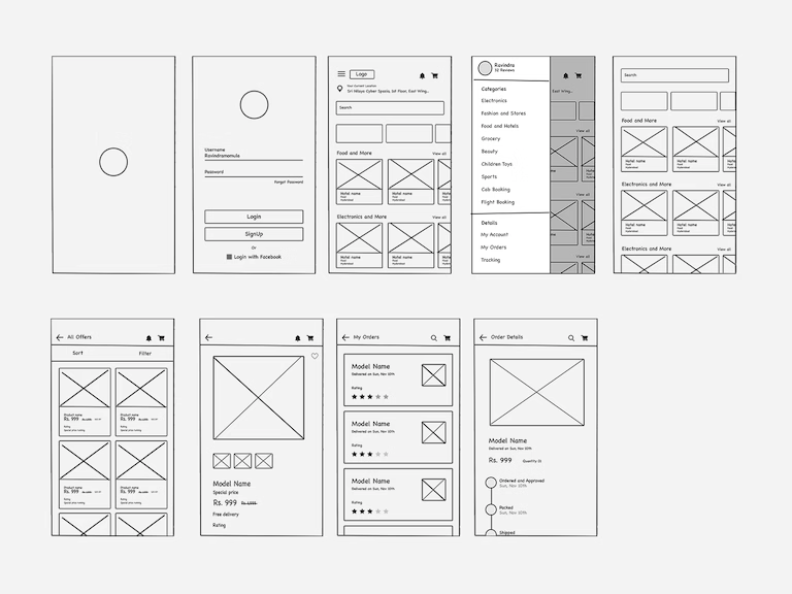

If what you want to test doesn’t yet exist or is still being created or ideated upon, you’ll need to build a prototype so users can give you quantitative or qualitative feedback. This can be daunting if you don’t yet have an internal product design team to handle this process or are in the process of building your team. If you need assistance, Emerge is happy to help.

The level of visual polish or interactivity that your prototype(s) will require depends largely upon the goals or outcomes you detailed at the outset. Generally, you don’t need extensive visual polish to gather quantitative data, since it measures whether users pass or fail a task. Conversely, qualitative data may be influenced by visual polish, because it reflects how the user feels about their experience as a whole, from both an aesthetic and a usability perspective.

In the above example, a quantitative question in the user testing process may ask the user to navigate to a specific screen in a mobile application. Since the objective of the question is simply whether the user can accomplish the task, a high level of visual polish is not a requirement. Producing a prototype like this is typically within the wheelhouse of a talented UX designer and is not time-consuming.

If the question, however, were to ask the user what they think of the brand or how the experience makes them feel (as in the above example), a higher level of visual polish would be required, as the experience is now being judged on its appearance and aesthetics. Bringing in a UI designer makes more sense when you have questions that focus on these aspects. Keep in mind that making a prototype pixel-perfect does take time and effort – far more than what is necessary for a low level of visual polish.

Elevating User Experience by Asking Users the Right Questions

Once your goals and outcomes are clearly defined and your visual examples are chosen for testing purposes, it’s time to frame your questions for users accordingly. In order to obtain actionable insights that you need to facilitate changes and improvements to your digital product, the users must understand what is being asked of them.

Formulate an Introduction

In order to prepare users to answer the questions that you have for them, you first need to create an introduction that puts them in the right frame of mind and provides them with the appropriate context to begin. Are these users patients in a hospital setting? Or are they truck drivers utilizing a mobile application on the road? Whether you’re involving users from a specific industry or demographic or just pulling from a randomized pool of users – a proper introduction will help them provide you with the information you’re seeking.

When utilizing a prototype to gather information, it’s also best practice to ensure users understand the nature of such a potentially limited digital experience, especially if the testing is unmoderated. For example, mentioning the following up-front can help users understand the boundaries and general expectations you have from them while taking a user test.

“Today you will be interacting with a prototype of a website to perform a number of specific tasks. Not everything will be functional in this prototype. If you encounter anything that does not work, tell us what you would expect to happen. If you like or dislike anything about the experience as you interact with it, please mention it.”

Asking the Questions

The core framework of a well-assembled user testing experience captures individual scenarios, tasks, key metrics, and follow-up questions. For each variable you want to test and get feedback on from users, consider the explanations and examples below:

Scenario: This gives the user their mindset for the task they are about to attempt to complete. It should be formatted as – I am [a specific user role], and must [perform a desired action] to [achieve a specific outcome].

Example: I am a patient in a hospital and must get to my doctor appointment on time so they can explain my lab results directly to me.

Task: This is the function you wish to have the user perform. It should be formatted as – Show us how you would [get to the specific outcome].

Example: Show us in this patient mobile application experience how you would navigate to your doctor.

Success Metric: This is how you know whether or not the task was successfully executed. It should be formatted as – User performs [actions that lead to completed success criteria].

Example: User brings up their appointment in the application, and uses the navigation tool to find their doctor successfully.

Follow-up questions: These can help you probe related aspects of a user’s experience as they go about their tasks.

Did you notice _______?

What did you expect _______ to do?

Do you think _______ should be different?

Did anything happen that you did not expect?

How would you describe _______ to a colleague?

As you can see with the above, context is key. You need to make things as easy as possible for your test users to understand why they’re performing a task as well as what you expect them to do. If you don’t provide these critical details, users may get frustrated during the testing process and skip questions or abandon the user test entirely.

Additionally, an important factor to consider is who is taking your test. Are you sampling random users, or are you targeting specific industry individuals? The former is better for general usability and quantitative testing, while the latter will provide richer detail through additional qualitative test questions.
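If you keep your test plan in a spreadsheet or script, the scenario, task, success metric, and follow-up framework described above can be captured as a simple record. The sketch below is one possible way to do that in Python; the class and field names are hypothetical, and the sample values are adapted from the hospital example to fit the templates.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class UserTestItem:
    """One test item: a scenario, a task, a success metric, and follow-ups."""
    role: str            # fills "I am [a specific user role]"
    action: str          # fills "must [perform a desired action]"
    outcome: str         # fills "to [achieve a specific outcome]"
    task: str            # fills "Show us how you would [...]"
    success_metric: str  # what the user does when the task succeeds
    follow_ups: List[str] = field(default_factory=list)

    def scenario(self) -> str:
        # Mirrors the scenario template from the article.
        return f"I am {self.role}, and must {self.action} to {self.outcome}."

    def task_prompt(self) -> str:
        # Mirrors the task template from the article.
        return f"Show us how you would {self.task}."


# Hypothetical item adapted from the hospital example.
item = UserTestItem(
    role="a patient in a hospital",
    action="get to my doctor appointment on time",
    outcome="hear my lab results explained directly",
    task="navigate to your doctor in this patient mobile application",
    success_metric=(
        "User brings up their appointment in the application, "
        "and uses the navigation tool to find their doctor successfully."
    ),
    follow_ups=["Did anything happen that you did not expect?"],
)

print(item.scenario())
print(item.task_prompt())
```

Writing each item out this way makes it easy to spot a task with no scenario or no success metric before the test reaches users.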

Wrapping Up, Now You Take the Test!

Now that you have your goals and outcomes defined, an existing platform or prototype to test, and a series of scenarios, tasks, key metrics, and follow-up questions for your users to go through, it’s time to take the test yourself to determine how easy it is. It’s also prudent to have someone else in your organization take the test. Whether that person needs to understand the nature of your digital product depends largely on whether you want general usability and/or quantitative data or industry-specific qualitative detail.

It can be daunting to approach the user testing process, but there is no better way to understand whether your digital product is actually working or whether users are running into roadblocks. Your internal stakeholders may feel they know the audience well enough that the process isn’t necessary. But if they are not users of the product themselves, how would they know? It’s time to ask your users what they think, and let the data speak for itself.

If you have questions about the user testing process or conducting tests, please reach out to Emerge. Emerge is a leading UX design agency. We thrive on collaborating with our clients.

Colleen Murphy, Copy Editor